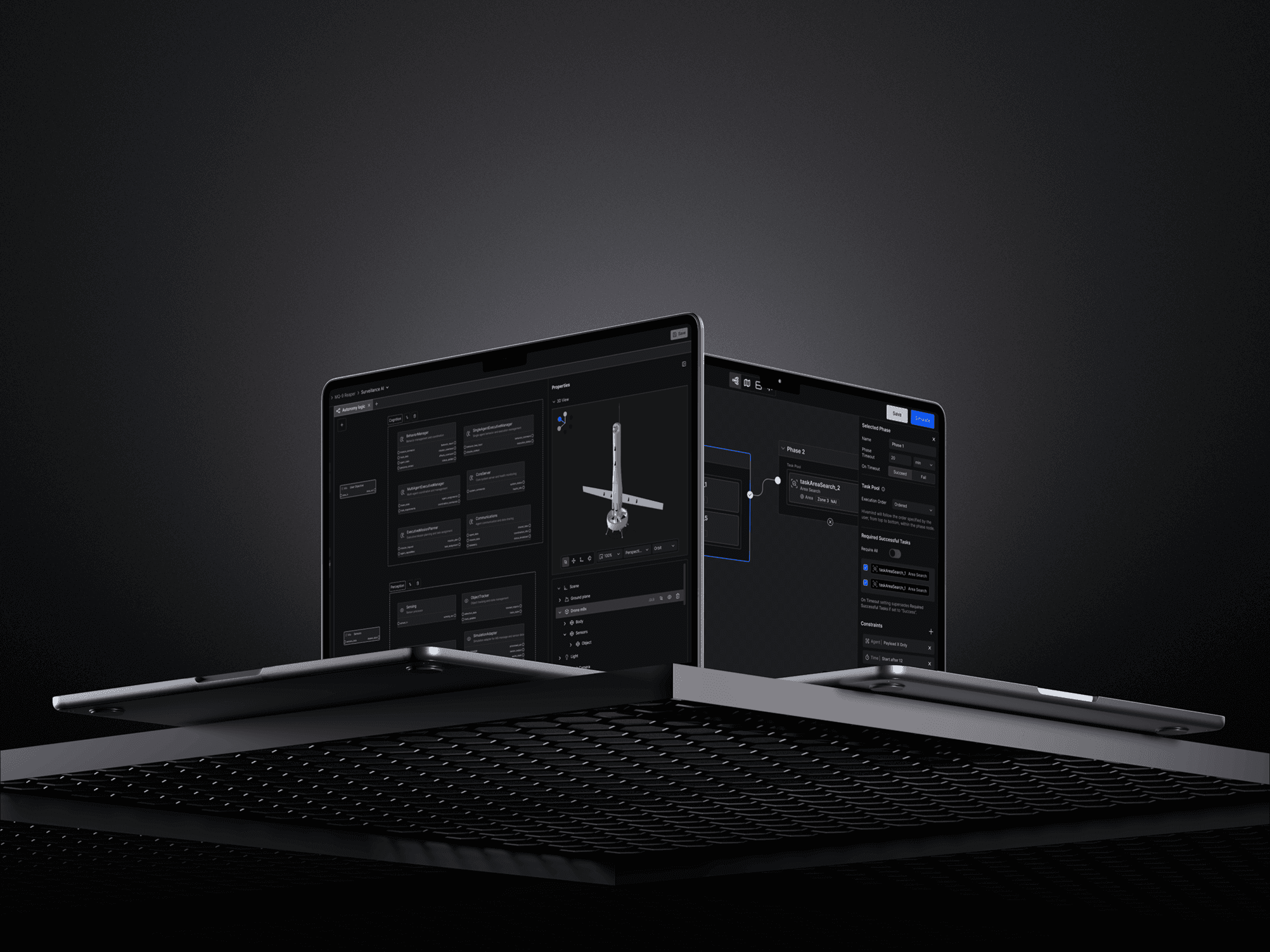

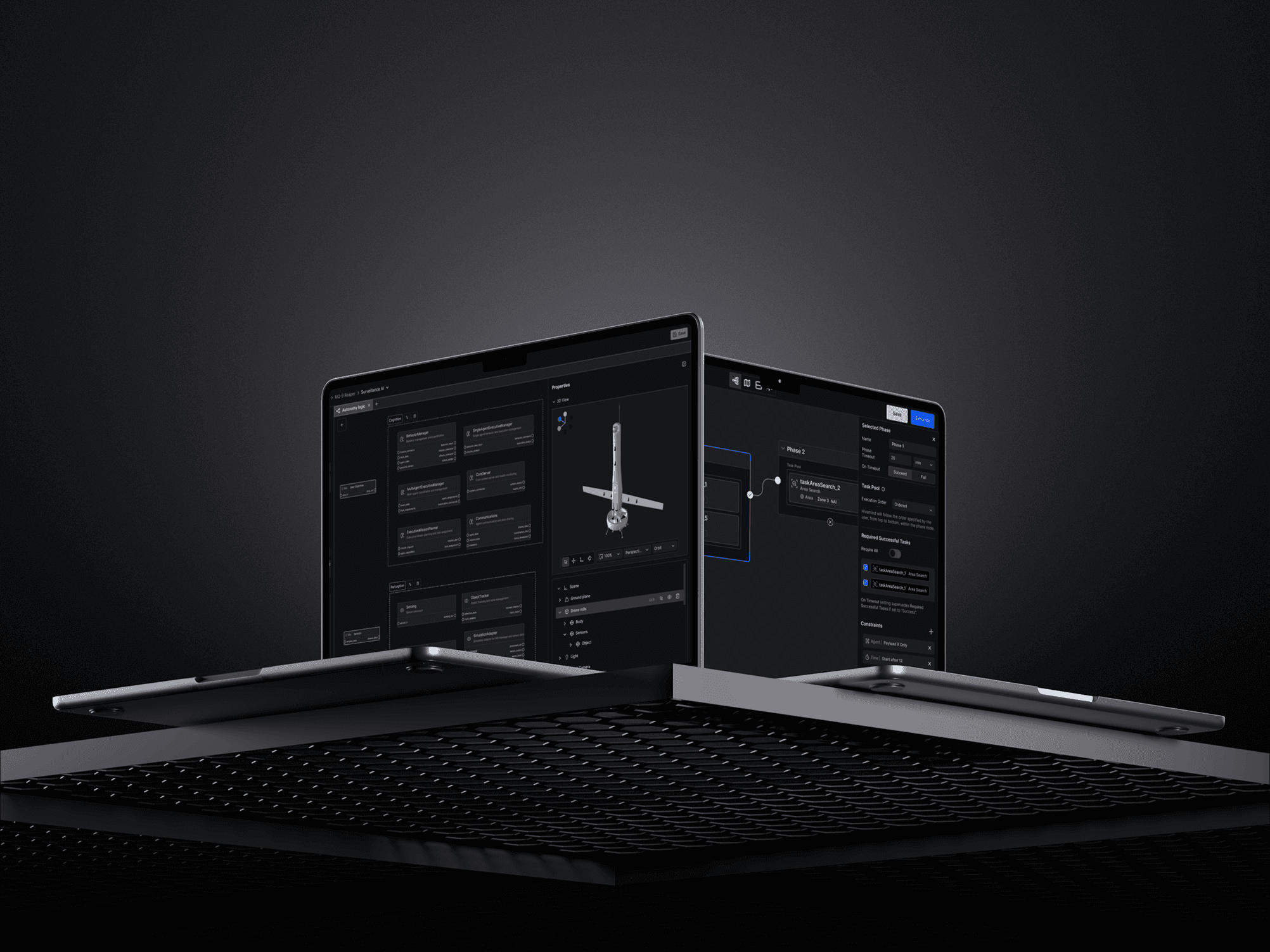

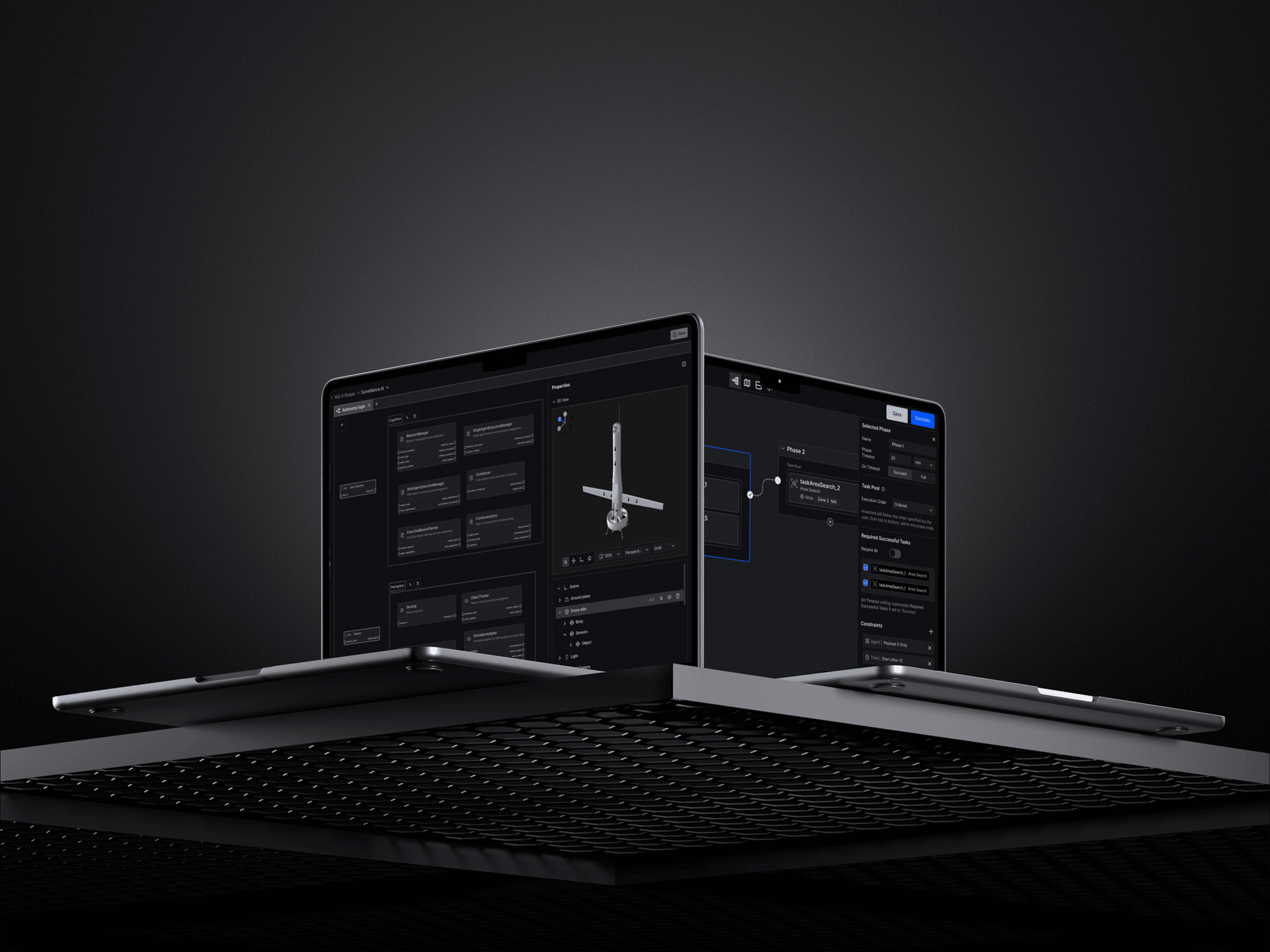

Hivemind Autonomy Platform

The AI-powered, modular autonomy software platform for rapid development and deployment to intelligent machines.

The Challenge

Shield AI's Hivemind autonomy platform enables engineers to develop, test, and deploy AI pilots for autonomous aircraft across defense and commercial aviation. The platform supports the entire autonomy lifecycle, from simulation and testing to hardware-in-the-loop (HIL) validation and live operations.

The problem is that autonomy development is inherently complex. Engineers need to design scenarios, configure AI agents, run simulations, analyze metrics, and deploy to physical systems, all while maintaining clarity about what's happening and why. Without thoughtful design, users either drown in technical complexity or lose trust in the system.

The core design challenge: how do you make mission-critical autonomy development clear, trustworthy, and fast without oversimplifying the sophisticated workflows that domain experts require?

Approach

I joined Shield AI to build the product design function from the ground up. This meant establishing both the team structure and the design philosophy that would guide how we solve problems.

Building the Team

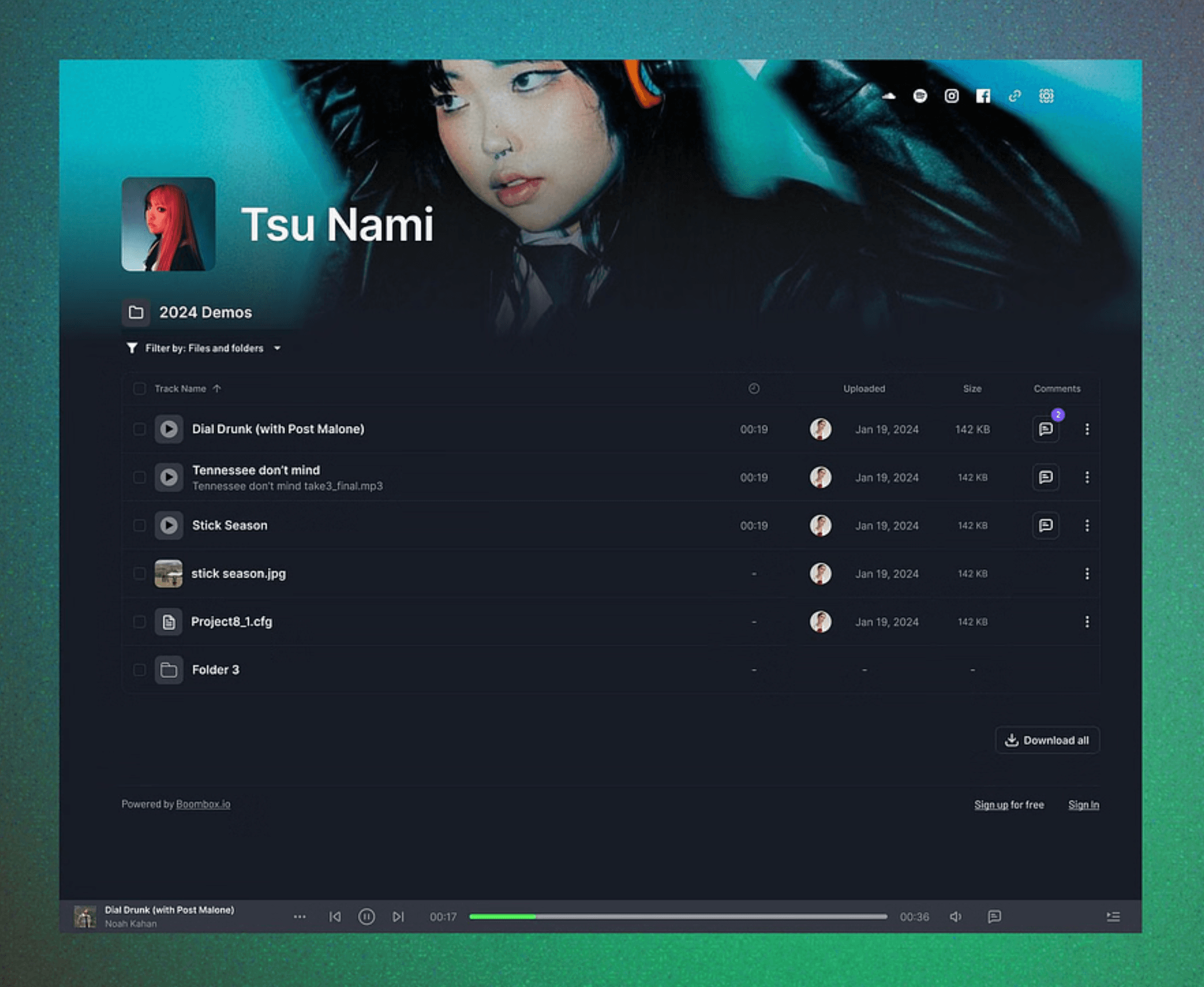

I structured the design organization around the autonomy lifecycle. We have designers focused on development (scenarios, agents, missions), testing (metrics, dashboards, analysis tools), and operations (HIL, ground control, deployment). Each designer owns end-to-end experiences within their domain while collaborating across the full user journey. I also embedded design systems and research as core functions from day one.

Discovery-Led Design

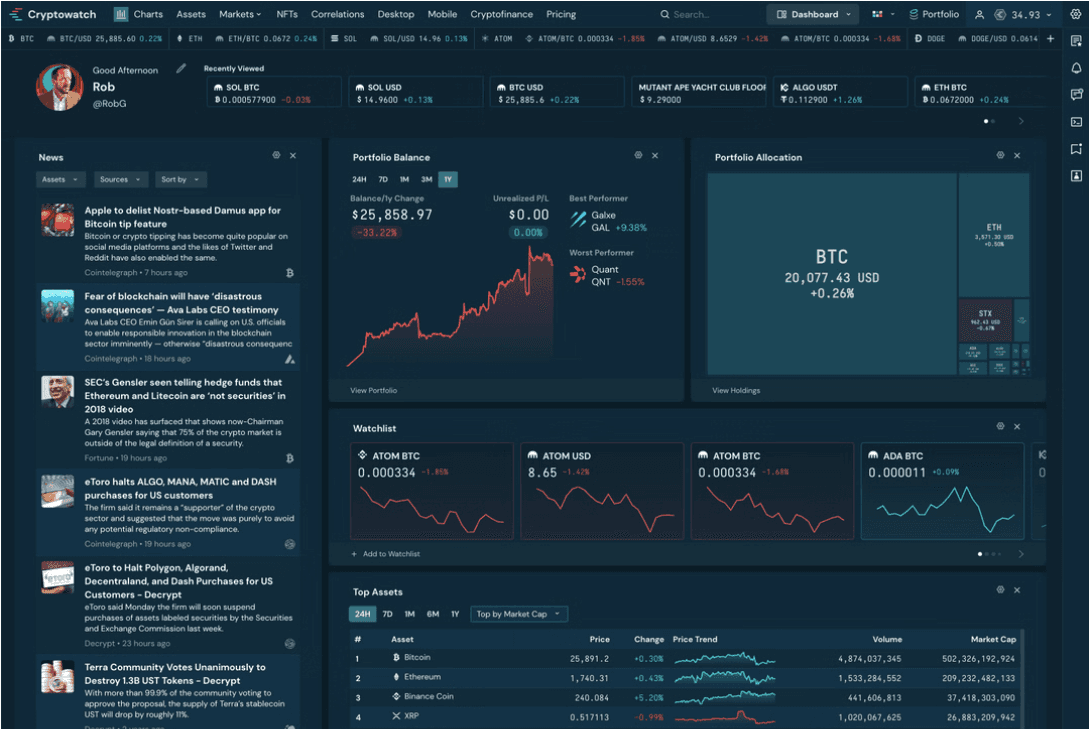

Autonomy engineering is a specialized domain. Early on, I led extensive user research through interviews and shadowing sessions with autonomy engineers, test operators, and domain experts. These deep dives revealed that the biggest friction wasn't a lack of features. It was cognitive overload and opacity. Users needed to understand system state, trace decisions, and move quickly between development phases without losing context.

Design Principles as North Star

Rather than design by feature request, we established five core principles that guide every decision. Clarity means organizing complexity through hierarchy and progressive disclosure. Trust comes from making system behavior transparent and traceable. Insight turns data into actionable understanding. Velocity minimizes friction in iteration loops. And craft means sweating the details because trust compounds from quality.

These principles aren't aspirational. They're decision-making tools. When evaluating designs, we ask: does this increase clarity? Does it build trust? Does it help users move faster?

Impact & Results

Increased Adoption Across the Autonomy Lifecycle

By adding key workflows like software-in-the-loop and hardware-in-the-loop (SIL/HIL) testing, simulation environments, and agent configuration tools, we expanded platform usage across the full development cycle. Engineers now iterate faster because they can move between design, test, and deployment without switching tools.

Faster Iteration Loops

Reduced cognitive load and streamlined information architecture enable autonomy engineers to run more test cycles per day. When users can quickly understand test results and trace system behavior, they spend less time debugging and more time improving AI performance.

Team & Operating Model

We built a cross-functional design team with designers embedded in product squads, and established a discovery-driven process in which design co-owns outcomes with product and engineering. No designs get thrown over the wall. The team now operates with consistent principles, shared components, and a culture of craft.

Reflections

Designing for domain experts requires humility. Early on, I learned that autonomy engineers don't need simplification. They need organization. The goal isn't to hide complexity but to structure it so users can navigate confidently.

The biggest insight: trust isn't built through polish alone. It's built through transparency. When engineers can trace why a decision was made or why a test failed, they trust the system to handle mission-critical work. That's where design principles like trust and insight become essential, not just as philosophy but as practical tools.

If I were starting over, I'd invest even more in early prototyping with real users in the loop. We learned the most when engineers could interact with working prototypes during testing sessions, not just static mocks. That feedback loop of watching domain experts use the tools under real conditions revealed edge cases and workflow friction we'd never have anticipated.

Want to learn more about this work? Unfortunately, detailed visuals and specifics are under NDA, but I'm happy to discuss the design process, team structure, and strategic decisions in more depth.